At TripleCheck things are never stable or pretty; same old news. However, data archival and search algorithms kept booming beyond expectations, and both are estimated to grow 10x over the next 12 months as we finally add more computing power and storage. So, I can't complain about that.

What I do complain about today is privacy. More specifically, the value of anonymity. One of the business models we envisioned for this technology is the capacity to break source code apart and discover who really wrote each part of it. We see it as a wonderful tool for plagiarism detection, but one of the more remote scenarios we envisioned was uncovering the identity of hidden malware authors.
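The post doesn't say how the tool attributes code to authors. As a rough, hypothetical illustration only (a generic technique, not TripleCheck's method), one common approach is to fingerprint overlapping windows of normalized lines and count how many of a suspect file's fingerprints also appear in code already attributed to each candidate author. The `index` structure, the window size `k=3` and the author names are assumptions.

```python
import hashlib
from collections import Counter


def normalize(source: str) -> list[str]:
    # Drop blank lines and collapse whitespace so formatting edits don't change hashes.
    lines = []
    for line in source.splitlines():
        stripped = " ".join(line.split())
        if stripped:
            lines.append(stripped)
    return lines


def fingerprints(source: str, k: int = 3) -> set[str]:
    # Hash every window of k consecutive normalized lines.
    lines = normalize(source)
    return {
        hashlib.sha1("\n".join(lines[i:i + k]).encode()).hexdigest()
        for i in range(len(lines) - k + 1)
    }


def rank_authors(suspect: str, index: dict[str, list[str]], k: int = 3) -> list[tuple[str, int]]:
    # index maps a (hypothetical) author name to code samples attributed to them.
    suspect_fp = fingerprints(suspect, k)
    scores = Counter()
    for author, samples in index.items():
        known = set()
        for sample in samples:
            known |= fingerprints(sample, k)
        scores[author] = len(suspect_fp & known)
    return scores.most_common()


index = {
    "alice": ["int a = 1;\nint b = 2;\nreturn a + b;\n"],
    "bob": ["x = 1\ny = 2\nprint(x)\n"],
}
suspect = "int  a = 1;\n\nint b = 2;\nreturn a + b;\nexit(0);\n"
print(rank_authors(suspect, index))  # [('alice', 1), ('bob', 0)]
```

Real attribution needs far larger indexes and extra care with shared boilerplate, which would otherwise match many authors at once.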

Malware authorship detection had been a hypothesis. It made good sense as a way to help catch malware authors, the "bad guys", and bring them to justice for committing cyber-warfare. There weren't many chances to test this capability over the past year because, frankly, we were too busy with other topics.

But today that changed. I was reading the news about IoT malware spreading in the wild, whose source code was published in order to maximize damage:

http://www.darknet.org.uk/2016/10/mirai-ddos-malware-source-code-leaked/

For those wondering why someone would make malware code public in this context: "script kiddies" will take that code, make changes and amplify its damaging reach. The author was anonymous, and nowadays it seems easy to just blame the Russians for every hack out there. So I said to myself: "let's see if we can find who really wrote the code".

I downloaded the source code of the original Mirai malware, which is available at: https://github.com/jgamblin/Mirai-Source-Code

I scanned the source code with our tool and started seeing plagiarism matches on the terminal.

What I wasn't expecting was such a clear list. In less than 10 minutes I had already narrowed the matches down to a single person on the Internet. For a start, he surely wasn't Russian. I took the time to go deeper and see what he had been doing in previous years: previous projects and areas of interest. My impression is that he might feel disgruntled with "the system", specifically with the lack of security and privacy that exists nowadays, and that this malware was his way of demonstrating to the public that IoT devices can be exploited far too easily and that this urgently needs to change.

And then I was sad.

This didn't look like a "bad guy"; he wasn't doing it for profit. This was a plain engineer like me. I could read his posts and see what he wrote about the lack of device security, to no avail: nobody listened. People only listen when something bad happens. I myself couldn't have cared less about IoT malware until this exploit was out in the wild, so what he did worked.

If his identity were revealed now, this might mean legal repercussions for an action that, in essence, is forcing manufacturers to fix their known security holes today (they wouldn't fix them otherwise, because it costs them extra money per device).

Can we really accept a future where, when talking gets nothing done, only an exploit forces these fixes to happen?

I don't know the answer. All I know is that an engineer with possibly good intentions released the source code to force a fix for a serious security hole before it grew bigger (the number of IoT devices grows every year). That person published the code under the presumption of anonymity, which our tech is now able to uncover, possibly bringing harm to a likely good person and engineer.

TripleCheck GmbH

The news is official: TripleCheck is now a full-fledged German company.

It has been a registered company since 2013, but it was labelled as a "hobby" company because of the unfortunate UG (haftungsbeschränkt) tag for young companies in Germany.

You might be wondering at this point: "what is the fuss all about?"

In Germany, a normal company (GmbH) needs to be created with a minimum of 25 000 EUR in the company bank account. When you don't have that much money, you can start a company with as little as one Euro, or as much as you can, but you get labelled as UG rather than GmbH.

When we first started the company, I couldn't have cared less about the UG vs. GmbH discussion. It was only when we started interacting with potential customers in Germany that we understood the problem: due to the UG label, your company is seen as unreliable. It soon became common to hear "I'm not sure if you will be around in 12 months", and this came with other implications, such as banks refusing to grant us a credit card linked to the company account.

Some people joke that UG stands for "Untergrund" (underground), in the sense that this type of company has strong odds of not staying afloat and going under within a few months. Sadly true.

You see, in theory a company can save money in its bank account until it reaches 25k EUR and then upgrade. In practice, we make money but at the same time have servers, salaries and other heavy costs to pay, so getting the balance up to 25k is quite a pain. Regardless of how many thousands of Euros are earned and then spent across the year, that yearly revenue flow does not count unless you have a screenshot showing the bank account above the magic 25k.

This month we finally broke that limitation.

No more excuses. We simply went well above that threshold and upgraded the company to a full GmbH. Ironically, this only happened when our team temporarily moved out of Germany and started opening a new office elsewhere in Europe.

Finally a GmbH. I have to say that Germany is, in some respects, very unfriendly to startups. The UG label reduces a young startup's chances of competing on the same level as a GmbH, even when the UG company's technology is clearly more advanced.

For example, in the United Kingdom you can register a Ltd. company and be on the same standing as the large majority of companies. Ironically, we could have registered a Ltd. in the UK with no money in the bank and then used that status in Germany to look "better" than a plain boring GmbH.

The second thing that bothers me is taxes. As a UG we pay the same level of taxes as a full GmbH, whereas in the UK you get tax breaks when starting an innovative company. In fact, in that country you get back 30% of your developer expenses (any expense considered R&D). From Germany we only got heavy tax bills to pay every month.

I'm happy that we are based in Germany. As you can see, we struggled to survive and move up to GmbH, and we carved out our place in Darmstadt against the odds. But Germany, you are really losing your competitive edge when there are so many advantages for Europeans to open startups in the UK rather than in DE. Let's try to improve that, shall we? :-)

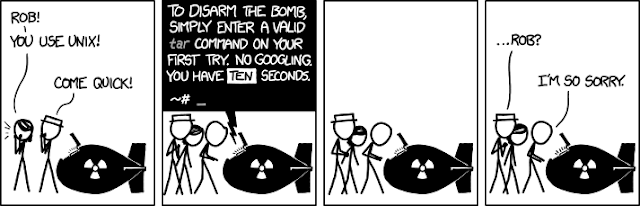

Intuitive design for command line switches

I use the command line.

It is easy and gets things done in a straightforward manner. However, the design of command line switches is an everyday problem. Even for the tools used most often, one never fully memorizes the switches required for common day-to-day actions.

For example, want to rsync something?

rsync -avvzP user@location1: ./

Want to extract a .tar file?

tar -xvf something.tar

The above is already not friendly, but it is more or less doable with practice. But now ask yourself (no googling allowed):

How do you find files with a given extension inside a folder and sub-folders?

You see, in Unix (Linux, Mac, etc.) this is NOT easy. Just like so many other commands, a very common task was not designed with intuitive usage in mind. The commands work, but only once you learn an encyclopedia of switches. Sure, there are manual pages and Google/Stack Overflow to help, but what happened to simplicity in design?

In Windows/ReactOS one would type:

dir *.txt /s

In Unix/Linux this is a top answer:

find ./ -type f -name "*.txt"

source: http://stackoverflow.com/a/5927391
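To make the point concrete, here is a minimal sketch of a switch-free tool for exactly this task, using only Python's standard library. The tool name `findext` and its argument layout are hypothetical. Note that `Path.rglob` matching is case-sensitive on Linux, unlike `dir` on Windows.

```python
import argparse
from pathlib import Path


def find_by_extension(extension: str, folder: str = ".") -> list[Path]:
    # Accept "txt" or ".txt"; rglob searches the folder and all sub-folders.
    ext = extension.lstrip(".")
    return sorted(p for p in Path(folder).rglob(f"*.{ext}") if p.is_file())


def main(argv=None) -> None:
    parser = argparse.ArgumentParser(
        prog="findext", description="List files with a given extension, recursively."
    )
    parser.add_argument("extension", help="extension to look for, e.g. txt")
    parser.add_argument("folder", nargs="?", default=".",
                        help="where to start (default: current folder)")
    args = parser.parse_args(argv)
    for path in find_by_extension(args.extension, args.folder):
        print(path)


if __name__ == "__main__":
    main()
```

Usage would then be just `findext txt`, or `findext txt some/folder` to start elsewhere: the common case needs no switches at all.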

Great. This kind of complication for everyday tasks is everywhere. Want to install something? You need to type:

apt-get install something

Since we mostly use apt-get to install stuff, why not just this?

apt-get something

When designing your next command line app, it would help end-users if you listed which usage scenarios will be most popular and reduced their switches to a bare minimum. Some people argue that switches deliver consistency, and this is a fact. However, one should balance consistency with friendliness, which in the end turns end-users into happy users.
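A minimal sketch of that advice applied to the apt-get example: the most popular action (install) needs no keyword, while the other actions keep explicit keywords. The tool name `pkg`, its command list and the choice of install as the default are all hypothetical.

```python
import sys

# Hypothetical command set for an apt-get-like tool called "pkg".
KNOWN_COMMANDS = ("install", "remove", "update", "search")
DEFAULT_COMMAND = "install"  # assumed to be the most popular scenario


def parse(argv: list[str]) -> tuple[str, list[str]]:
    """Return (command, targets); the command keyword is optional for installs."""
    if argv and argv[0] in KNOWN_COMMANDS:
        return argv[0], argv[1:]
    # No recognised keyword: treat every argument as a package to install.
    return DEFAULT_COMMAND, list(argv)


def main() -> None:
    command, targets = parse(sys.argv[1:])
    print(f"would run {command!r} on {targets}")


if __name__ == "__main__":
    main()
```

The cost is the consistency trade-off mentioned above: a package literally named `remove` now needs the explicit form `pkg install remove`.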

Be nice, keep it simple.

¯\_(ツ)_/¯

Question:

What is the weirdest Unix command that really upsets you?

Noteworthy reactions to this post:

- Defending Unix against simpler commands:

http://leancrew.com/all-this/2016/03/in-defense-of-unix

- This post ended up stirring a "Linux > Windows" fight:

https://news.ycombinator.com/item?id=11229025

How big was triplecheck data in 2015?

Last year was the first time we released the offline edition of our tooling for evaluating software originality. At the beginning, this tool was shipped on a single-terabyte USB drive. However, shipping the drive by regular post was difficult, and the I/O speed at which we could read data from the disk peaked at 90Mbps, which in turn made scans take way too long (defined as anything running longer than 24 hours).

As the year moved along, we kept reducing the disk space required for fingerprints and at the same time kept increasing the total number of fingerprints dispatched with each new edition.

In the end we managed to fit the basic originality data sets inside a special 240GB USB thumb drive. By "special", I mean a drive containing two miniature SSD devices connected in hardware-based RAID 0 mode. For those unfamiliar with RAID, this means two disks working together and appearing on the surface as a single disk. The added advantage is faster reads, because data is physically read from two disks at once, roughly doubling speed. Since it has no moving parts, the speed of the whole thing jumped to 300Mbps. My impression is that we haven't yet reached the peak speed at which data can be read from the device; our bottleneck simply moved to the CPU cores/software not being able to digest data faster. For contractual reasons I can't mention the thumb drive model, but it is a device in the $500 to $900 range. Certainly worth the price when scans complete faster.
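For readers new to RAID 0, a toy sketch of the layout helps: data is split into fixed-size chunks that are written to the disks in round-robin order (striping), with no parity or redundancy, so losing either disk loses the data. The 4-byte chunk size is purely for illustration; real controllers use much larger stripes. The speed-up comes from reading both disks in parallel, which this sequential sketch does not model.

```python
def stripe(data: bytes, disks: int = 2, chunk: int = 4) -> list[bytearray]:
    # RAID 0 layout: chunk n goes to disk n % disks; no parity, no redundancy.
    layout = [bytearray() for _ in range(disks)]
    for n, start in enumerate(range(0, len(data), chunk)):
        layout[n % disks] += data[start:start + chunk]
    return layout


def read_back(layout: list[bytearray], chunk: int = 4) -> bytes:
    # Reassemble by taking chunks from the disks in the same round-robin order.
    disks = len(layout)
    offsets = [0] * disks
    out = bytearray()
    n = 0
    while True:
        d = n % disks
        piece = layout[d][offsets[d]:offsets[d] + chunk]
        if not piece:
            break
        out += piece
        offsets[d] += chunk
        n += 1
    return bytes(out)


layout = stripe(b"ABCDEFGHIJ")
print(layout)             # [bytearray(b'ABCDIJ'), bytearray(b'EFGH')]
print(read_back(layout))  # b'ABCDEFGHIJ'
```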

Another multiplier for speed and data size was compression. We tested compression algorithms to find one that wouldn't need much CPU to decompress and at the same time would reduce disk space. We settled on plain zip compression, since it consumed minimal CPU and gave a good-enough ratio of 5:1, meaning that something that used 5GB before now used only 1GB of disk space.
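The post says only "plain zip compression". Zip archives normally use DEFLATE, which Python's standard `zlib` module implements, so a minimal sketch of measuring the ratio and checking the lossless round trip could look like this. The sample data is made up, so its ratio says nothing about the 5:1 figure above.

```python
import zlib


def compress_ratio(raw: bytes, level: int = 6) -> tuple[bytes, float]:
    # DEFLATE, the method used inside .zip files; level 6 is zlib's default trade-off.
    packed = zlib.compress(raw, level)
    return packed, len(raw) / len(packed)


# Hypothetical sample: repetitive, fingerprint-like text lines, which compress well.
sample = "".join(f"{i:08x},src/module_{i % 50}.c,cpp\n" for i in range(10000)).encode()
packed, ratio = compress_ratio(sample)
assert zlib.decompress(packed) == sample  # lossless round trip
print(f"{len(sample)} -> {len(packed)} bytes, ratio {ratio:.1f}:1")
```

Higher levels (up to 9) shrink data a little more at extra compression cost; decompression speed stays similar, which fits the goal of cheap reads on the customer's machine.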

There is an added advantage to this technique besides disk space: we could now read the same data almost 5x faster than before. Where we previously needed to read 5GB from the disk, we now needed to read only 1GB to access the same data (discounting CPU load). It then became possible to fit 1TB of data inside a 240GB drive, reducing the needed disk space by 4x while increasing speed by 3x for the same data.
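The arithmetic behind these claims, as a minimal sketch: reading compressed data multiplies the usable read rate by the compression ratio, but only until decompression on the CPU becomes the bottleneck, which matches the CPU observation made earlier in this post. The `cpu_rate` value used below is a hypothetical placeholder, not a measured figure.

```python
def effective_read_rate(disk_rate: float, ratio: float, cpu_rate: float = float("inf")) -> float:
    """Usable data per second when reading compressed data.

    Each unit read from disk expands to `ratio` units after decompression,
    but the result can never exceed how fast the CPU decompresses (`cpu_rate`).
    """
    return min(disk_rate * ratio, cpu_rate)


def fits_on_drive(uncompressed_gb: float, ratio: float, drive_gb: float) -> bool:
    """True if data of the given uncompressed size fits on the drive once compressed."""
    return uncompressed_gb / ratio <= drive_gb


# Figures from the post: 300Mbps disk speed, 5:1 zip ratio, 1TB of data, 240GB drive.
print(effective_read_rate(300, 5))       # 1500 (same unit as the disk rate)
print(effective_read_rate(300, 5, 800))  # 800: a hypothetical CPU limit now dominates
print(fits_on_drive(1000, 5, 240))       # True, since 1000 / 5 = 200GB
```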

All this brings us to the question: how big was TripleCheck data last year?

These are the raw numbers:

- source files: 519,276,706
- artwork files: 157,988,763
- other files: 326,038,826
- total files: 1,003,304,295
- snippet files: 149,843,377
- snippets: 774,544,948

Snippets per file extension:

- jsp: 892,761
- cpp: 161,198,956
- ctp: 19,708
- ino: 41,808
- c: 54,797,323
- nxc: 324
- hh: 20,261
- tcc: 27,974
- j: 2,190
- hxx: 446,002
- rpy: 2,457
- cu: 17,757
- inl: 337,850
- cs: 26,457,501
- jav: 1,780
- cxx: 548,553
- py: 189,340,451
- php: 229,098,401
- java: 94,896,020
- hpp: 6,481,794
- cc: 9,915,077

Snippet storage:

- uncompressed: 255 GB
- compressed: 48 GB
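As a quick consistency check on these figures (every number below is copied from the list; nothing new is assumed), the per-extension counts add up exactly to the snippet total, the three file categories add up to the file total, and the shares and storage ratio match the rough percentages and 5:1 compression quoted in this post.

```python
files = {"source": 519_276_706, "artwork": 157_988_763, "other": 326_038_826}
snippets_by_ext = {
    "jsp": 892_761, "cpp": 161_198_956, "ctp": 19_708, "ino": 41_808,
    "c": 54_797_323, "nxc": 324, "hh": 20_261, "tcc": 27_974, "j": 2_190,
    "hxx": 446_002, "rpy": 2_457, "cu": 17_757, "inl": 337_850,
    "cs": 26_457_501, "jav": 1_780, "cxx": 548_553, "py": 189_340_451,
    "php": 229_098_401, "java": 94_896_020, "hpp": 6_481_794, "cc": 9_915_077,
}

total = sum(files.values())
assert total == 1_003_304_295
assert sum(snippets_by_ext.values()) == 774_544_948

for kind, count in files.items():
    print(f"{kind}: {count / total:.1%}")  # source: 51.8%, artwork: 15.7%, other: 32.5%
print(f"snippet compression: {255 / 48:.1f}:1")  # 5.3:1
```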

One billion individual fingerprints of binary files were included. Around 500 million (50%) of these fingerprints are source code files in 54 different programming languages. Around 15% are related to artwork, meaning icons, png and jpg files. The remaining files are the ones usually included with software projects, things like .txt documents and such.

Over the year we kept adding snippet detection capabilities for mainstream programming languages: the majority of C-based dialects, Java, Python and PHP. On the portable offline edition we were unable to include the full C collection; it was simply too big, and there wasn't much customer demand for it (with only one notable exception across the year). In terms of qualified individual snippets, we are tracking a total of around 775 million across 150 million source code files. A qualified snippet is one that contains enough valid logical instructions. We use a metric called "diversity", meaning that a snippet is only accepted when a given percentage of it consists of logical commands. For example, a long switch or IF statement without other relevant code is simply ignored, because it is not typically relevant from an originality point of view.
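The post doesn't define how "diversity" is computed. Purely as a hypothetical sketch of the idea, one could measure the share of lines that are distinct logical statements, treating switch/case/if/else/break lines and lone braces as structure; a long switch then scores near zero and is rejected. The regular expression, the scoring rule and the 0.5 threshold are all assumptions, and the rule is crude (a one-line `if` with real logic counts as structure).

```python
import re

# Hypothetical rule: lines starting with these tokens count as structure, not logic.
STRUCTURAL = re.compile(r"^(switch\b|case\b|default\b|if\b|else\b|break;|[{}])")


def diversity(snippet: str) -> float:
    """Share of non-blank lines that are distinct logical statements (0.0 to 1.0)."""
    lines = [line.strip() for line in snippet.splitlines() if line.strip()]
    if not lines:
        return 0.0
    # A set, so repeated identical statements are counted only once.
    logical = {line for line in lines if not STRUCTURAL.match(line)}
    return len(logical) / len(lines)


def is_qualified(snippet: str, threshold: float = 0.5) -> bool:
    return diversity(snippet) >= threshold


switch_snippet = """switch (x) {
case 1: return 'a';
case 2: return 'b';
case 3: return 'c';
default: return 'z';
}"""

loop_snippet = """int total = 0;
for (int i = 0; i < n; i++) {
total += values[i];
}
return total / n;"""

print(diversity(switch_snippet), is_qualified(switch_snippet))  # 0.0 False
print(diversity(loop_snippet), is_qualified(loop_snippet))      # 0.8 True
```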

The body of data was built from publicly available source code repositories and a selection of websites such as fora, mailing lists and social networks. We are picky about which files to include in the offline edition and only accept around 300 specific file types. The raw data collected during 2015 went above 3 trillion binary files, and much effort went into iterating over this archive within weeks instead of months to build relevant fingerprint indexes.

For 2016 the challenge continues. There is an ongoing data explosion. We noticed 200% growth between 2014 and 2015, although this might partly be due to our own data-gathering techniques having improved and no longer being limited by disk space, as they were when we first started in 2014. More interesting is remembering that the NIST fingerprint index held a relevant compendium of 20 million fingerprints in 2011, and that now we need technology to handle 50x as much data.

So let's see. This year I think we'll be using the newer 512GB models. A big question mark is whether we can squeeze out more performance by using the built-in GPU found in modern computers. This is new territory for us, and there is no certainty that moving data between disk, CPU and GPU will bring added performance or be worth the investment. The computation is already light as it is, and not particularly suited (IMHO) to GPU-style processing.

The other field to explore is image recognition. We have one of the biggest archives of miniature artwork (icons and such) used in software. There are cases where the same icon is saved in different formats, and right now we are not detecting them. The second doubt is whether we should pursue this kind of detection at all, i.e. whether it is actually necessary (no doubt it would be cool, though). What I'm sure of is that we have already doubled the archive compared to last year and that we'll soon be creating new fingerprint indexes. And so the optimization starts again to keep speed acceptable. Oh well, data everywhere. :-)
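One well-known way to catch the same icon saved in different formats is a perceptual hash such as average hash (aHash). It is swapped in here purely for illustration; the post does not say which approach, if any, TripleCheck would use. The sketch assumes the PNG/JPG/ICO file has already been decoded into grayscale pixel rows by some image library (not shown). Similar images give hashes with a small Hamming distance.

```python
def downscale(pixels: list[list[float]], size: int) -> list[list[float]]:
    """Box-average a grayscale image down to size x size cells."""
    h, w = len(pixels), len(pixels[0])
    if h < size or w < size:
        raise ValueError("image must be at least size x size pixels")
    cells = []
    for cy in range(size):
        y0, y1 = cy * h // size, (cy + 1) * h // size
        row = []
        for cx in range(size):
            x0, x1 = cx * w // size, (cx + 1) * w // size
            block = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(block) / len(block))
        cells.append(row)
    return cells


def average_hash(pixels: list[list[float]], size: int = 8) -> int:
    """One bit per cell: 1 if the cell is brighter than the mean of all cells."""
    values = [v for row in downscale(pixels, size) for v in row]
    mean = sum(values) / len(values)
    bits = 0
    for v in values:
        bits = (bits << 1) | (1 if v > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    return bin(a ^ b).count("1")


icon = [[x * 16 for x in range(16)] for _ in range(16)]     # horizontal gradient
reencoded = [[v + 1 for v in row] for row in icon]          # same icon, slightly shifted
other = [[y * 16 for _ in range(16)] for y in range(16)]    # vertical gradient
print(hamming(average_hash(icon), average_hash(reencoded)))  # 0
print(hamming(average_hash(icon), average_hash(other)))      # 32
```

A match threshold (say, a handful of differing bits out of 64) would then be tuned on real data.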

2016

The last twelve months did not pass quickly. It was a long year.

Family-wise, things changed. Some relatives were affected by Alzheimer's, others show old age all too early. My own mom had surgery for two different cancers, plus a foot surgery. My 80-year-old grandma broke a leg, which is a serious problem at her age. In worse cases, family members passed away. Too much, too often, too quickly. I tried to be present, to support the treatment expenses and somehow, just somehow, help.

The saddest event was the death of my wife's father. It happened overnight just before Christmas, far too quickly and unexpectedly. Also sad was the earlier death of our pet dog, who had been part of the family for a whopping 17 years. It was sad to see our old dog put to sleep. He was in constant pain and couldn't even walk any more. I will miss our walks in the park, three times a day, whether snow or summer. We'd just go out on the street for fresh air so he could do his business. Many times I enjoyed the sun outside the office thanks to him, and I'm still grateful for those good moments.

A great moment in 2015 was the birth of my second son, a strong and healthy boy. It brought back the happy memory of my first child's birth back in 2008. Since that year almost everything has changed, especially maturity-wise. In 2009 I let the world I knew fall apart, and to this day I feel sad about my own decisions that eventually broke my first marriage. I can't change the past, but I can learn, work and aim to become a better father for my children. This is what I mean by maturity: going the extra mile to balance family and professional activities. It is sometimes crazy, but somehow there must be balance.

This past year we had our first proper family vacation in 5 years: two weeks at a mountain lake. No phone, no Internet. I had to walk a kilometer on foot to get some WiFi on the phone at night. That summer we were talking with investors and communication was crucial, so the idea of a vacation seemed crazy. In the end, family was given preference, and after the summer we didn't go forward with investors in any case. Quality time with family was what really mattered; lesson learned.

Tech-wise, we did the impossible, repeatedly. If by December 2014 we had an archive with a trillion binary files and struggled hard with how to handle the data already gathered, by the end of 2015 we estimated having 3x as much data stored. Not only did the availability of open data grow exponentially, we also kept adding new data sources before they could vanish. Where we previously targeted some 30 types of source code files related to mainstream programming languages, we now target around 400 different binary formats. In fact, we don't even target just files: today we also extract relevant data from blogs, forum sites and mailing lists. I say "estimated" because only in February will we likely be able to pause and compute rigorous metrics. There was an informal challenge at DARPA to count the number of source code lines publicly available to humanity today; we might be able to report back a 10^6 growth compared to an older census.

Having many files and handling that much data with very limited resources is one part of the equation, which we (fortunately) had already solved back in 2014. The main challenge for 2015 was how to find the needles of relevant information inside a large haystack of public source code within a reasonable time. Even worse, how to enable end-users (customers) to find these needles by themselves, offline, on their laptops, without a server farm somewhere (privacy). We did manage to get the whole thing working. Fast. The critical test was a customer with over 10 million LOC in different languages, written over the past 15 years. We had doubts about such a large code base, but running the TripleCheck tooling on a normal i7 laptop to crunch the matches took only 4 days, compared to 11 days with other tools using smaller databases. That was a few months ago; in 2016 we aim to reduce this to a single day of processing (or less). Impossible is only impossible until someone makes it possible. Don't listen to naysayers; just take as many steps as you need to climb a mountain, no matter how big it might be.

Business-wise, it was quite a ride. My worst decisions (mea culpa) in 2015 were pitching our company at a European-wide venture capital event and relying completely on outsourced sales, without preparing for either. The first decision wasn't initially bad. We went there and got 7 investors interested in follow-up meetings. We were very honest about where the money would be used, along with the expected growth. However, the cliché that engineers are not good at business might be accurate. Investors speak a different language, and there was disappointment on both sides. This initiative cost us thousands of euros in travel and material, along with 4 months of stalled development.

Worse was believing that the outsourced team could deliver sales (without asking for proof or a test beforehand). Investors may invest without proof of revenue, but once a company goes to market they want to wait and see how it performs. In our case, it didn't perform. Many months later we had paid thousands of EUR to the outsourced company and had zero product revenue from them to account for. I felt like a complete fool for letting this happen and not putting on the brakes earlier.

The only thing saving the company at this point was our business angel. Thanks to his support we kept getting new clients for our consulting activities. Most of these clients became recurring M&A customers, and this is what kept the company afloat. I can never thank him enough; a true business angel in the literal sense of the expression.

By October, the dust from outsourcing and investing had settled. We were now certain that we wanted to build a business, not a speculative startup. We finally got product sales moving by bringing aboard a veteran of this kind of challenge. For a start, no more giving our tools away for free during the trial phase. I was skeptical, but it worked well, because it focused our attention on companies willing to pay upfront for a pilot test. This made customers take the trial phase seriously, since it had a real cost for them. The second change was to stop using PowerPoint during meetings. I used to prepare slides before customer meetings, but this was counter-productive. More often than not, customers couldn't care less about what we do. Surprisingly enough, they care about what they do and how to get their own problems solved. :-) Today we focus on listening more than speaking at such meetings. Those two simple changes made quite a difference.

So, that's the recap from last year. Forward we move. :-)

Family-wise, things changed. Some relatives were affected by Alzheimer's, others are showing old age all too early. My own mom had surgery for two different cancers, plus foot surgery. My 80-year-old grandma broke a leg, which is serious at her age. In the worst cases, family members passed away. Too much, too often, too quick. I tried to be present, to help with the treatment expenses and somehow, just somehow, help. Saddest was the death of my wife's father. It happened overnight just before Christmas, far too quick and unexpected. Also sad was the earlier death of our family dog, who had been part of the family for a whopping 17 years. It was sad to see our old dog put to sleep. He was in constant pain and couldn't even walk anymore. I will miss our walks in the park, three times a day, snow or summer. We'd just get out on the street for fresh air so he could do his business. Many times I enjoyed the sun outside the office thanks to him, and I'm still grateful for those good moments.

A great moment in 2015 was the birth of my second son, a strong and healthy boy. It brought back the happy memory of my first child being born back in 2008. Since then almost everything has changed, especially maturity-wise. In 2009 I made the world I knew fall apart, and to this day I feel sad about my own decisions that eventually broke my first marriage. I can't change the past, but I can learn, work and aim to become a better father for my children. This is what I mean by maturity: going that extra mile to balance family and professional activities. It is sometimes crazy, but somehow there must be balance. In 2015 we had our first proper family vacation in 5 years: two weeks at a mountain lake. No phone, no Internet. I had to walk a kilometer to get some WiFi on the phone at night. That summer we were talking with investors and communication was crucial, so the idea of a vacation seemed crazy. In the end, family came first, and after the summer we didn't go forward with the investors anyway. Quality time with family was what really mattered. Lesson learned.

Tech-wise, we did the impossible, repeatedly. By December 2014 we had an archive with a trillion binary files and struggled hard with how to handle the data already gathered; by the end of 2015 we estimated that we had 3x as much data stored. Not only did the availability of open data grow exponentially, we also kept adding new data sources before they could vanish. Where we used to target some 30 types of source code files for mainstream programming languages, we now target around 400 different types of binary formats. In fact, we don't even target just files: today we also extract relevant data from blogs, forum sites and mailing lists. I say "estimated" because only in February will we likely be able to pause and compute rigorous metrics. There was an informal challenge at DARPA to count the number of source code lines publicly available to humanity today; we might be able to report a 10^6 growth compared to an older census. Having many files and handling that much data with very limited resources is one part of the equation that we (fortunately) had already solved back in 2014. The main challenge for 2015 was how to find the needles of relevant information inside a large haystack of public source code within a reasonable time. Even harder was enabling end-users (customers) to find these needles by themselves, offline on their own laptops, without a server farm somewhere (for privacy). We did manage to get the whole thing working. Fast. The critical test was a customer with over 10 million LOC in different languages, written over the past 15 years. We had doubts about such a large code base. But running the TripleCheck tooling on a normal i7 laptop to crunch the matches took only 4 days, compared to 11 days with other tools using smaller databases.
