Every month so far has been eventful, but this one (August) is going beyond the usual level of "busyness".

Some months ago we set ourselves the goal of preparing the company to process open source code on a larger scale. It was no longer enough to just develop the tooling for discovering and marking the licenses of open source files; we now required our own knowledge base to improve the detection techniques.

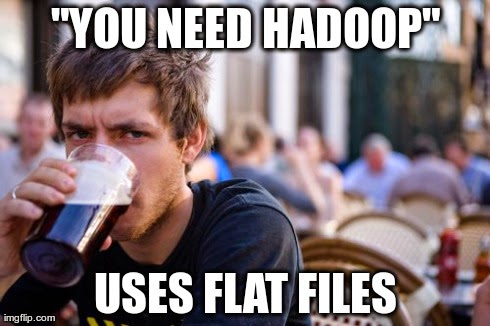

To make this possible, we would need an infrastructure capable of handling files at a larger scale. "You need Hadoop", they said. But our resources are scarce. The company income is barely enough to cover a salary. Scaling our technology to tackle millions of files seemed a long shot considering what we had at hand.

But it wasn't.

I remember with a smile the Portuguese proverb: "Quem não tem cão, caça com gato" (in English: those without a dog hunt with a cat). And so it was in our case.

No money for server farms. No Amazon cloud processing, no distributed storage. No teams of engineers, scientists and mathematicians using Hadoop and other big data buzzwords. No time for peer review or academic research. No public funding.

And perhaps it was better this way.

It is a lonely and daunting task to write something that involves parallel operations, files with hundreds of gigabytes and an ever closer deadline to deliver a working solution.

However, it does force a person to find a way through the mess. To look at what really matters, to simply say "no" and keep focus. It is a lonely task, but at the same time you are free to decide without delay, to experiment without fear, and to stay motivated by getting a bit closer to the intended goal each day.

And so it went. What seemed difficult for a single person to implement was split into pieces that together built an infrastructure. I always keep close to mind the wise saying of a professor at CMU: "You don't know what you don't know". Very true indeed: too often the working solution differed from the planned one.

To some extent I'm happy. We got things working very quickly, with a simplicity that anyone can maintain. Several hundred million files processed in a month, over a hundred million stored in our archive, and we are now moving to the final stage at a fast pace.

It turned out that we didn't need a large team, nor a computer farm, nor the full stack of big data buzzwords. Surely they are relevant. But in our case they were unaffordable, and simpler solutions got things working.

TL;DR:

If a big data challenge can be broken into smaller pieces, go for it.
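
To make "smaller pieces" a little more concrete, here is a minimal sketch in Python. It is only an illustration and not the code described in this post: the record format (`path<TAB>license`) and the names `batches`, `count_licenses` and `process` are made up for the example. The idea is simply to read a large input in fixed-size batches, compute a small result per batch, and merge those results.

```python
from collections import Counter
from itertools import islice
from typing import Iterable, Iterator, List


def batches(items: Iterable[str], size: int) -> Iterator[List[str]]:
    """Yield successive lists of at most `size` items from any iterable."""
    it = iter(items)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


def count_licenses(batch: List[str]) -> Counter:
    """Hypothetical per-batch job: tally the license tag of each record.

    Assumes every record looks like "path<TAB>license"; this format is
    invented for the example.
    """
    return Counter(line.rstrip("\n").split("\t")[1] for line in batch)


def process(records: Iterable[str], batch_size: int = 1000) -> Counter:
    """Work through the records batch by batch and merge the small results."""
    total: Counter = Counter()
    for batch in batches(records, batch_size):
        total.update(count_licenses(batch))
    return total
```

Since `records` can be any iterable, including an open file handle, only one batch needs to sit in memory at a time, regardless of how large the input file is.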
